WASHINGTON DC – AI may take your job in the not-too-distant future, and it may eventually take human life, according to a new report commissioned by the US Department of State.

The State Department commissioned AI startup Gladstone to conduct an AI risk assessment in October 2022, about a month before ChatGPT came out.

The goal of the report was to examine the risk of AI weaponization and loss of control. The corresponding action plan is intended to increase the safety and security of advanced AI, according to an executive summary of the report.

The 284-page report, released on Monday, detailed some of the “catastrophic risks” associated with artificial general intelligence (AGI), a yet-to-be-achieved level of AI that Gladstone defined as a system that can “outperform humans across a broad range of economic and strategic domains.”

Some of the risks could “lead to human extinction,” the report said. An online version includes a shorter summary of the findings.

“The development of AGI, and of AI capabilities approaching AGI, would introduce catastrophic risks unlike any the United States has ever faced,” the report stated.

Gladstone said it incorporated surveys of 200 industry stakeholders and workers at top AI developers such as OpenAI, Google DeepMind, Anthropic, and Meta. Its report also drew on a historical analysis of comparable technological developments, such as the nuclear arms race.

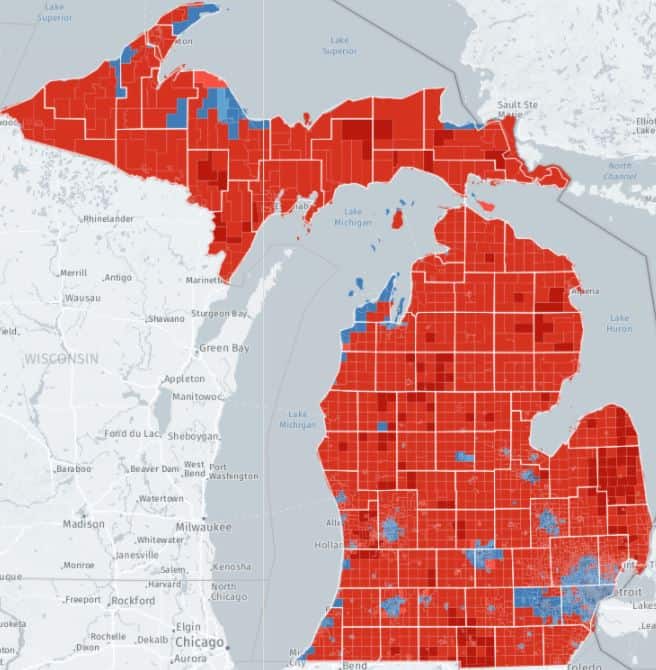

The report concluded that AI posed a high risk of weaponization, which could take the form of biowarfare, mass cyber-attacks, disinformation campaigns, or autonomous robots. Gladstone CEO Jeremie Harris told Business Insider he personally considered cyber-attacks the highest risk, while CTO Edouard Harris said election interference was his biggest concern.

The report also indicated a high risk of loss of control. If this were to happen, it could lead to “mass-casualty events” or “global destabilization,” the report said.

“Publicly and privately, researchers at frontier AI labs have voiced concerns that AI systems developed in the next 12 to 36 months may be capable of executing catastrophic malware attacks, assisting in bioweapon design, and directing swarms of goal-directed human-like autonomous agents,” the report said.
