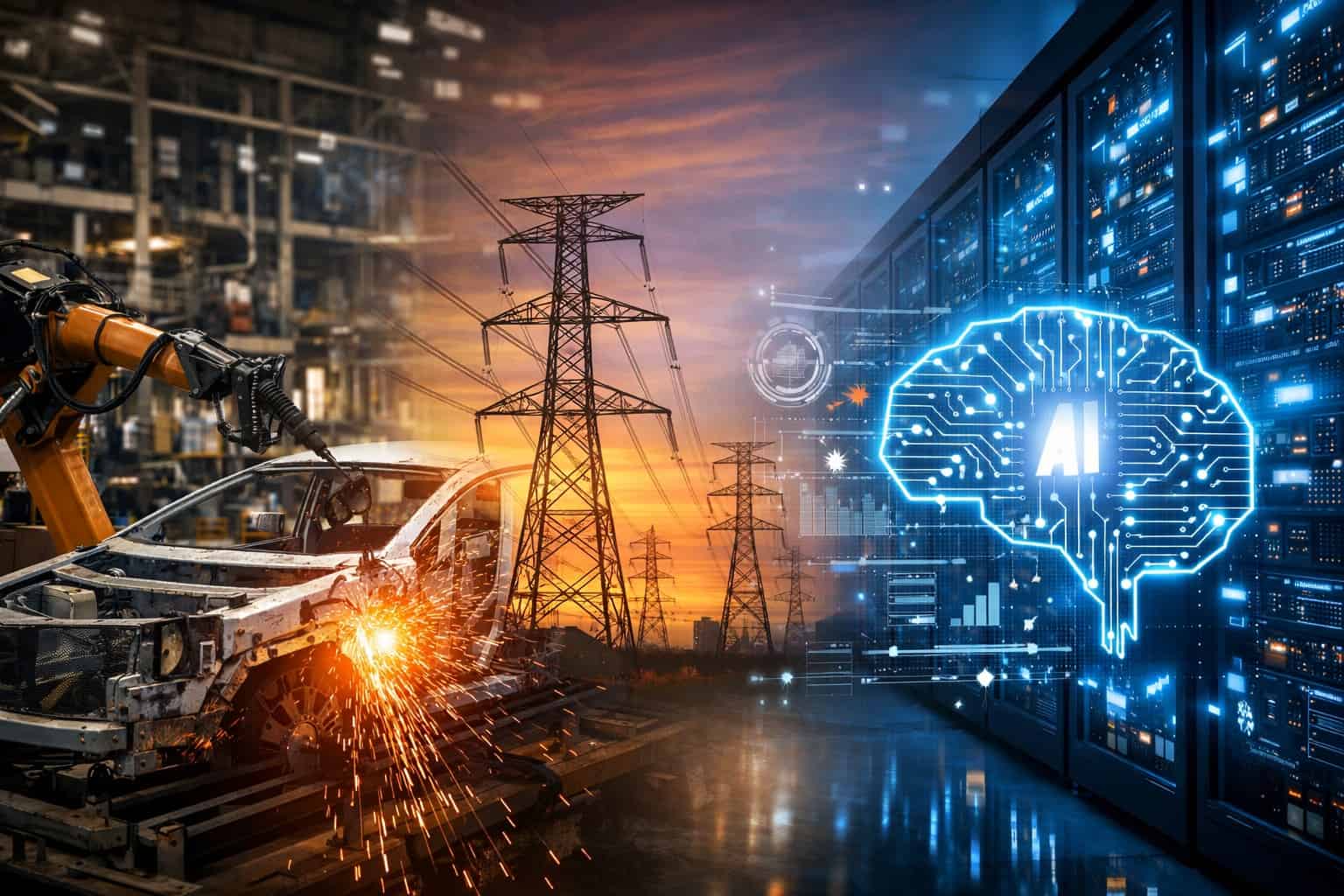

DETROIT – A new industry survey from Universal Robots makes one thing clear: artificial intelligence is leaving the screen and entering the physical world.

After a decade in which AI’s biggest breakthroughs were digital — search, analytics, and generative models — the next phase is about machines that can see, decide, and act in real environments. The survey’s predictions for 2026 and beyond point to a rapid acceleration of what the industry calls physical AI — and they help explain why high-profile efforts such as Elon Musk’s humanoid robot push are suddenly gaining credibility.

For Michigan’s manufacturing- and mobility-driven economy, the implications are immediate.

From Digital Intelligence to Physical Intelligence

Most AI systems today analyze information. Physical AI systems do work.

They combine perception (vision and sensors), reasoning (AI models), and motion (motors, grippers, wheels, or legs) to operate in spaces designed for humans. The distinction between analyzing and acting matters because a robot on a factory floor must deal with uncertainty: parts aren’t always where they should be, materials vary, and people move unpredictably.

The Universal Robots survey reflects a broad industry shift: intelligence is no longer defined only by what machines know, but by how well they can adapt in the physical world.

Survey Signal #1: Robots Are Becoming Predictive, Not Just Reactive

For decades, industrial robots have followed scripts: they wait for a signal, then execute a programmed move. The survey suggests that this scripted model is nearing its limit.

By 2026, robots are expected to:

Anticipate failures before they happen

Adjust grip strength, speed, or trajectory mid-task

Learn from prior outcomes and improve over time

This predictive capability is critical in environments like logistics, advanced manufacturing, and food processing — all major sectors in Michigan — where variability is unavoidable and downtime is costly.

Survey Signal #2: AI Is Being Purpose-Built for Physical Tasks

Another clear takeaway: physical AI is not generic AI.

Instead of adapting large, general-purpose models, robotics firms are deploying task-specific intelligence tuned for manipulation, navigation, and safe human interaction. These systems integrate vision, language understanding, and motor control — allowing robots to respond to high-level instructions rather than rigid commands.

This is why collaborative robots are spreading beyond automotive assembly lines to small and mid-sized manufacturers, where flexibility matters more than raw speed.

Survey Signal #3: A New Data Economy Is Emerging — This Time in the Real World

Digital AI was fueled by text, images, and clicks. Physical AI is fueled by:

Force and torque data